Content

- Jointly distributed random variables

- Joint distribution function | Joint Cumulative probability distribution function | joint probability mass function | joint probability density function

- Examples on Joint distribution

- Independent random variables and joint distribution

- Example of independent joint distribution

- SUMS OF INDEPENDENT RANDOM VARIABLES BY JOINT DISTRIBUTION

- sum of independent uniform random variables

- sum of independent Gamma random variables

- sum of independent exponential random variables

- Sum of independent normal random variable | sum of independent Normal distribution

- Sums of independent Poisson random variables

- Sums of independent binomial random variables

Jointly distributed random variables

Jointly distributed random variables are two or more random variables whose probabilities are described together by a single joint distribution. In other words, when an experiment produces several outcomes of interest at once, the probabilities shared by those outcomes form the joint distribution of the corresponding random variables. Situations of this kind occur frequently in problems of chance.

Joint distribution function | Joint Cumulative probability distribution function | joint probability mass function | joint probability density function

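For the random variables X and Y, the joint cumulative distribution function (or simply the joint distribution function) is

$$F(a,b) = P\{X \le a,\ Y \le b\}, \qquad -\infty < a, b < \infty$$

where the nature of the joint probability depends on whether the random variables X and Y are discrete or continuous. The individual distribution function of X can be obtained from this joint cumulative distribution function as

$$F_X(a) = P\{X \le a\} = P\{X \le a,\ Y < \infty\} = F(a, \infty)$$

and similarly for Y as

$$F_Y(b) = P\{Y \le b\} = P\{X < \infty,\ Y \le b\} = F(\infty, b)$$

These individual distribution functions of X and Y are known as marginal distribution functions when a joint distribution is under consideration. Together with the joint distribution function, they are very helpful for getting probabilities such as

$$P\{X > a,\ Y > b\} = 1 - F_X(a) - F_Y(b) + F(a,b)$$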

In addition, the joint probability mass function of discrete random variables X and Y is defined as

$$p(x,y) = P\{X = x,\ Y = y\}$$

The individual probability mass functions of X and Y can be obtained from this joint probability mass function as

$$p_X(x) = P\{X = x\} = \sum_{y} p(x,y), \qquad p_Y(y) = P\{Y = y\} = \sum_{x} p(x,y)$$

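For continuous random variables, the joint probability density function f(x,y) is the function that satisfies

$$P\{(X,Y) \in C\} = \iint_{(x,y)\in C} f(x,y)\,dx\,dy$$

where C is any region of the two-dimensional plane, and the joint distribution function for continuous random variables is

$$F(a,b) = P\{X \le a,\ Y \le b\} = \int_{-\infty}^{b}\int_{-\infty}^{a} f(x,y)\,dx\,dy$$

The probability density function can be obtained from this distribution function by differentiating,

$$f(a,b) = \frac{\partial^2}{\partial a\,\partial b}F(a,b)$$

and the marginal probability density functions follow from the joint probability density function

as

$$f_X(x) = \int_{-\infty}^{\infty} f(x,y)\,dy$$

and

$$f_Y(y) = \int_{-\infty}^{\infty} f(x,y)\,dx$$

for the random variables X and Y respectively.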

Examples on Joint distribution

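- Find the joint probabilities for the random variables X and Y, the numbers of mathematics and statistics books among 3 books taken at random from a set of books which contains 3 mathematics, 4 statistics and 5 physics books.

- Find the joint probability mass function of the number of boys B and the number of girls G in a family chosen at random from a sample of families in which 15% have no child, 20% have 1 child, 35% have 2 children and 30% have 3 children, taking each child to be, independently, equally likely a boy or a girl.

In both cases the joint probabilities follow from the definition. For the books,

$$p(i,j) = P\{X = i,\ Y = j\} = \frac{\binom{3}{i}\binom{4}{j}\binom{5}{3-i-j}}{\binom{12}{3}}, \qquad i, j \ge 0,\ i + j \le 3$$

and for the families, conditioning on the number of children,

$$P\{B = i,\ G = j\} = P\{i + j \text{ children}\}\binom{i+j}{i}\left(\frac{1}{2}\right)^{i+j}$$

These probabilities can be displayed in tabular form, with one row for each value of the first variable and one column for each value of the second.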
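The table for the books example can be generated mechanically. The following is a minimal Python sketch, assuming the counting formula p(i, j) = C(3,i) C(4,j) C(5,3−i−j) / C(12,3) for drawing 3 books without replacement; the function name `books_joint_pmf` is illustrative and not part of the original article. It also sums the rows and columns to obtain the marginal probability mass functions.

```python
from fractions import Fraction
from math import comb


def books_joint_pmf(n_math=3, n_stat=4, n_phys=5, draws=3):
    """Joint pmf p(i, j): i mathematics and j statistics books among the draws."""
    total = comb(n_math + n_stat + n_phys, draws)
    pmf = {}
    for i in range(draws + 1):
        for j in range(draws + 1 - i):
            # Choose i mathematics, j statistics and the rest physics books.
            ways = comb(n_math, i) * comb(n_stat, j) * comb(n_phys, draws - i - j)
            pmf[(i, j)] = Fraction(ways, total)
    return pmf


pmf = books_joint_pmf()

# Marginal pmfs: sum the joint pmf over the other variable.
marginal_x = {i: sum(p for (a, _), p in pmf.items() if a == i) for i in range(4)}
marginal_y = {j: sum(p for (_, b), p in pmf.items() if b == j) for j in range(4)}

# Print the table row by row (rows: mathematics books, columns: statistics books).
for i in range(4):
    row = [str(pmf.get((i, j), Fraction(0))) for j in range(4)]
    print(i, row, "row sum:", marginal_x[i])
print("column sums:", [str(marginal_y[j]) for j in range(4)])
```

Exact fractions are used so that the entries can be compared directly with hand calculations.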

- Calculate the probabilities

if for the random variables X and Y the joint probability density function is given by

with the help of definition of joint probability for continuous random variable

and the given joint density function the first probability for the given range will be

in the similar way the probability

and finally

- Find the density function of the quotient X/Y of the random variables X and Y if their joint probability density function is

To find the probability density function of X/Y we first find the distribution function of X/Y and then differentiate the result,

so by the definition of joint distribution function and given probability density function we have

thus by differentiating this distribution function with respect to a we will get the density function as

where a is within zero to infinity.

Independent random variables and joint distribution

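Two random variables X and Y with a joint distribution are said to be independent if

$$P\{X \in A,\ Y \in B\} = P\{X \in A\}\,P\{Y \in B\}$$

where A and B are any sets of real numbers. In terms of events, independent random variables are random variables for which the events {X ∈ A} and {Y ∈ B} are independent for all A and B.

Thus for any values of a and b

$$P\{X \le a,\ Y \le b\} = P\{X \le a\}\,P\{Y \le b\}$$

and the joint cumulative distribution function of the independent random variables X and Y is

$$F(a,b) = F_X(a)\,F_Y(b)$$

If we consider discrete random variables X and Y, then independence is equivalent to

$$p(x,y) = p_X(x)\,p_Y(y) \qquad \text{for all } x, y$$

since

$$P\{X \in A,\ Y \in B\} = \sum_{y \in B}\sum_{x \in A} p(x,y) = \sum_{y \in B}\sum_{x \in A} p_X(x)\,p_Y(y) = \sum_{y \in B} p_Y(y)\sum_{x \in A} p_X(x) = P\{Y \in B\}\,P\{X \in A\}$$

and similarly for continuous random variables

$$f(x,y) = f_X(x)\,f_Y(y) \qquad \text{for all } x, y$$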
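For discrete random variables, the factorization criterion p(x, y) = p_X(x) p_Y(y) can be tested directly on a joint probability table. The sketch below is illustrative only: the helper names `marginals` and `is_independent` and the two small joint tables are assumptions made for the example, not taken from the article.

```python
from fractions import Fraction
from itertools import product


def marginals(joint):
    """Return the marginal pmfs of X and Y from a joint pmf {(x, y): probability}."""
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0) + p
        py[y] = py.get(y, 0) + p
    return px, py


def is_independent(joint):
    """Check p(x, y) == p_X(x) * p_Y(y) for every pair of values."""
    px, py = marginals(joint)
    return all(joint.get((x, y), 0) == px[x] * py[y] for x, y in product(px, py))


half = Fraction(1, 2)

# Two fair coin flips recorded separately: the joint pmf factorizes.
independent_joint = {(x, y): half * half for x in (0, 1) for y in (0, 1)}

# Y is a copy of X: all the mass lies on the diagonal, so the pmf does not factorize.
dependent_joint = {(0, 0): half, (1, 1): half}

print(is_independent(independent_joint))  # True
print(is_independent(dependent_joint))    # False
```

Exact fractions avoid false negatives from floating-point rounding; with floats, a tolerance-based comparison would be needed instead.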

Example of independent joint distribution

- If the number of patients entering a hospital on a specific day is Poisson distributed with parameter λ, and each patient is male with probability p and female with probability (1-p), show that the numbers of male and female patients entering the hospital are independent Poisson random variables with parameters λp and λ(1-p).

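Let the random variables X and Y denote the numbers of male and female patients. Conditioning on the total X+Y, and noting that the event {X = i, Y = j} is impossible unless X + Y = i + j,

$$P\{X = i,\ Y = j\} = P\{X = i,\ Y = j \mid X + Y = i + j\}\,P\{X + Y = i + j\}$$

As X+Y is the total number of patients entering the hospital, which is Poisson distributed,

$$P\{X + Y = i + j\} = e^{-\lambda}\frac{\lambda^{i+j}}{(i+j)!}$$

As each patient is male with probability p and female with probability (1-p), the probability that exactly i of a fixed total of i+j patients are male is the binomial probability

$$P\{X = i,\ Y = j \mid X + Y = i + j\} = \binom{i+j}{i}p^{i}(1-p)^{j}$$

Using these two values, the joint probability becomes

$$P\{X = i,\ Y = j\} = \binom{i+j}{i}p^{i}(1-p)^{j}\,e^{-\lambda}\frac{\lambda^{i+j}}{(i+j)!} = e^{-\lambda p}\frac{(\lambda p)^{i}}{i!}\;e^{-\lambda(1-p)}\frac{[\lambda(1-p)]^{j}}{j!}$$

Summing over j and over i respectively, the probabilities for male and female patients are

$$P\{X = i\} = e^{-\lambda p}\frac{(\lambda p)^{i}}{i!}\sum_{j} e^{-\lambda(1-p)}\frac{[\lambda(1-p)]^{j}}{j!} = e^{-\lambda p}\frac{(\lambda p)^{i}}{i!}$$

and

$$P\{Y = j\} = e^{-\lambda(1-p)}\frac{[\lambda(1-p)]^{j}}{j!}$$

which shows that both of them are Poisson random variables with the parameters λp and λ(1-p), and, because the joint probability is the product of these two marginal probabilities, that they are independent.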
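The factorization in this example can be checked numerically. The sketch below compares the joint probability obtained by conditioning on the total (binomial times Poisson) with the product of two Poisson probabilities with parameters λp and λ(1−p). The values `lam = 4.0` and `p = 0.3` are arbitrary illustrative choices, and the function names are not from the article.

```python
from math import comb, exp, factorial


def poisson_pmf(k, rate):
    """P{N = k} for a Poisson random variable with the given rate."""
    return exp(-rate) * rate**k / factorial(k)


def joint_by_conditioning(i, j, lam, p):
    """P{X=i, Y=j} = P{X=i, Y=j | X+Y=i+j} * P{X+Y=i+j}."""
    return comb(i + j, i) * p**i * (1 - p) ** j * poisson_pmf(i + j, lam)


lam, p = 4.0, 0.3  # illustrative values only

# Largest gap between the two expressions over a grid of (i, j) values.
max_gap = max(
    abs(
        joint_by_conditioning(i, j, lam, p)
        - poisson_pmf(i, lam * p) * poisson_pmf(j, lam * (1 - p))
    )
    for i in range(15)
    for j in range(15)
)
print("largest difference:", max_gap)
```

The difference is at the level of floating-point rounding, which is consistent with the algebraic identity; it is a numerical check rather than a proof.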

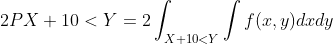

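- Find the probability that a person has to wait more than ten minutes for a client at a meeting, if that person and the client each arrive at a time uniformly distributed between 12 and 1 pm.

Let the random variables X and Y denote the arrival times of that person and the client, in minutes after 12, so that X and Y are independent and uniform over (0, 60). The person waits more than ten minutes when X + 10 < Y, so the probability computed from the joint density of X and Y is

$$P\{X + 10 < Y\} = \iint_{x+10<y} f_X(x)\,f_Y(y)\,dx\,dy = \int_{10}^{60}\int_{0}^{y-10}\left(\frac{1}{60}\right)^{2}dx\,dy = \frac{25}{72}$$

By symmetry, the probability that whichever of the two arrives first has to wait more than ten minutes is twice this value, 25/36.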
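A simulation gives a quick sanity check on the waiting-time example. The sketch below assumes that the two arrival times are independent and uniform over the 60 minutes between 12 and 1 pm, and it estimates two readings of the question: the named person waits more than ten minutes for the client (exact value 25/72), and whoever arrives first waits more than ten minutes (exact value 25/36). The function name, trial count and seed are illustrative.

```python
import random


def estimate_waiting(trials=200_000, seed=0):
    """Monte Carlo estimates of P{Y - X > 10} and P{|Y - X| > 10}."""
    rng = random.Random(seed)
    person_waits = 0
    first_arrival_waits = 0
    for _ in range(trials):
        x = rng.uniform(0, 60)  # arrival time of the person, minutes after 12
        y = rng.uniform(0, 60)  # arrival time of the client, minutes after 12
        if y - x > 10:
            person_waits += 1
        if abs(y - x) > 10:
            first_arrival_waits += 1
    return person_waits / trials, first_arrival_waits / trials


p_person, p_first = estimate_waiting()
print("person waits > 10 min:", p_person, "exact:", 25 / 72)
print("first arrival waits > 10 min:", p_first, "exact:", 25 / 36)
```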

calculate

where X,Y and Z are uniform random variable over the interval (0,1).

here the probability will be

for the uniform distribution the density function

for the given range so

SUMS OF INDEPENDENT RANDOM VARIABLES BY JOINT DISTRIBUTION

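Let X and Y be independent continuous random variables with probability density functions f_X and f_Y. The cumulative distribution function of their sum X+Y is

$$F_{X+Y}(a) = P\{X + Y \le a\} = \iint_{x+y\le a} f_X(x)\,f_Y(y)\,dx\,dy = \int_{-\infty}^{\infty}\int_{-\infty}^{a-y} f_X(x)\,f_Y(y)\,dx\,dy = \int_{-\infty}^{\infty} F_X(a-y)\,f_Y(y)\,dy$$

Differentiating this cumulative distribution function gives the probability density function of the sum,

$$f_{X+Y}(a) = \frac{d}{da}\int_{-\infty}^{\infty} F_X(a-y)\,f_Y(y)\,dy = \int_{-\infty}^{\infty} f_X(a-y)\,f_Y(y)\,dy$$

Using these two results, we now look at some continuous random variables and the distribution of their sum when they are independent.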

sum of independent uniform random variables

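For independent random variables X and Y uniformly distributed over the interval (0,1), the probability density function of both variables is

$$f_X(a) = f_Y(a) = \begin{cases} 1 & 0 < a < 1 \\ 0 & \text{otherwise} \end{cases}$$

so for the sum X+Y we have

$$f_{X+Y}(a) = \int_{0}^{1} f_X(a-y)\,dy$$

For any value of a between zero and one this is

$$f_{X+Y}(a) = \int_{0}^{a} dy = a$$

and if we restrict a to lie between one and two it is

$$f_{X+Y}(a) = \int_{a-1}^{1} dy = 2 - a$$

This gives the triangular-shaped density function

$$f_{X+Y}(a) = \begin{cases} a & 0 \le a \le 1 \\ 2 - a & 1 < a < 2 \\ 0 & \text{otherwise} \end{cases}$$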
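The triangular density of the sum of two independent uniform (0,1) random variables, f(a) = a for 0 ≤ a ≤ 1 and f(a) = 2 − a for 1 < a < 2, can be compared with a direct numerical evaluation of the convolution integral ∫ f_X(a − y) f_Y(y) dy. The sketch below uses a simple midpoint rule; the function names and step count are illustrative assumptions.

```python
def uniform_density(x):
    """Density of a uniform (0, 1) random variable."""
    return 1.0 if 0.0 < x < 1.0 else 0.0


def convolve_at(a, f, g, lo=0.0, hi=1.0, steps=10_000):
    """Midpoint-rule approximation of the integral of f(a - y) * g(y) over [lo, hi]."""
    width = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        y = lo + (k + 0.5) * width
        total += f(a - y) * g(y)
    return total * width


def triangular_density(a):
    """Closed-form density of the sum of two independent uniform (0, 1) variables."""
    if 0.0 <= a <= 1.0:
        return a
    if 1.0 < a < 2.0:
        return 2.0 - a
    return 0.0


for a in (0.25, 0.5, 1.0, 1.5, 1.75):
    numeric = convolve_at(a, uniform_density, uniform_density)
    print(a, numeric, triangular_density(a))
```

The integration range [0, 1] is enough here because the second uniform density vanishes outside it; for other densities the limits `lo` and `hi` would need to cover the support of g.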

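If we generalize to n independent uniform (0,1) random variables X_1, X_2, ..., X_n, then the distribution function of their sum,

$$F_n(x) = P\{X_1 + X_2 + \cdots + X_n \le x\}$$

is, by mathematical induction,

$$F_n(x) = \frac{x^{n}}{n!}, \qquad 0 \le x \le 1$$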

sum of independent Gamma random variables

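If we have two independent gamma random variables X and Y with parameters (s, λ) and (t, λ), each with the usual gamma density function, which for Y is

$$f(y) = \frac{\lambda e^{-\lambda y}(\lambda y)^{t-1}}{\Gamma(t)}, \qquad 0 < y < \infty$$

and for X has s in place of t, then the density of the sum of the independent gamma random variables is

$$f_{X+Y}(a) = \frac{1}{\Gamma(s)\Gamma(t)}\int_{0}^{a}\lambda e^{-\lambda(a-y)}[\lambda(a-y)]^{s-1}\,\lambda e^{-\lambda y}(\lambda y)^{t-1}\,dy = K e^{-\lambda a}\int_{0}^{a}(a-y)^{s-1}y^{t-1}\,dy = K e^{-\lambda a}a^{s+t-1}\int_{0}^{1}(1-x)^{s-1}x^{t-1}\,dx = C e^{-\lambda a}a^{s+t-1}$$

where the substitution y = ax is used and K and C are constants that do not depend on a. Since the density must integrate to 1, the constant is determined and

$$f_{X+Y}(a) = \frac{\lambda e^{-\lambda a}(\lambda a)^{s+t-1}}{\Gamma(s+t)}$$

This shows that the sum of independent gamma random variables with a common λ is again a gamma random variable, with parameters (s+t, λ).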
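The gamma result can be checked numerically by evaluating the convolution integral for two gamma densities with a common rate and comparing it with the gamma density with shape s + t. The sketch below is a minimal check under assumed illustrative parameters (s = 2, t = 3, λ = 1.5); the function names are not from the article.

```python
from math import exp, gamma


def gamma_density(y, shape, rate):
    """Gamma density: rate * exp(-rate*y) * (rate*y)**(shape-1) / Gamma(shape)."""
    if y <= 0:
        return 0.0
    return rate * exp(-rate * y) * (rate * y) ** (shape - 1) / gamma(shape)


def sum_density(a, s, t, rate, steps=20_000):
    """Midpoint-rule convolution of gamma(s, rate) and gamma(t, rate) densities at a."""
    width = a / steps
    total = 0.0
    for k in range(steps):
        y = (k + 0.5) * width
        total += gamma_density(a - y, s, rate) * gamma_density(y, t, rate)
    return total * width


s, t, rate = 2.0, 3.0, 1.5  # illustrative parameters
numeric = sum_density(2.0, s, t, rate)
closed_form = gamma_density(2.0, s + t, rate)
print(numeric, closed_form)
```

Shapes of at least 1 are chosen so that the integrand stays bounded at the endpoints; smaller shapes would call for a more careful quadrature.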

sum of independent exponential random variables

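In the same way, the density function and distribution function of a sum of independent exponential random variables follow by assigning specific values to the gamma parameters. An exponential random variable with rate λ is a gamma random variable with parameters (1, λ), so the sum of n independent exponential random variables with the same rate λ is a gamma random variable with parameters (n, λ).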

Sum of independent normal random variable | sum of independent Normal distribution

If we have n independent normal random variables $X_i$, i = 1, 2, ..., n, with respective means $\mu_i$ and variances $\sigma_i^2$, then their sum is also a normal random variable, with mean $\sum \mu_i$ and variance $\sum \sigma_i^2$.

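We first show this for two independent normal random variables, X with parameters 0 and σ² and Y with parameters 0 and 1. To find the probability density function of the sum X+Y, let

$$c = \frac{1}{2\sigma^{2}} + \frac{1}{2} = \frac{1+\sigma^{2}}{2\sigma^{2}}$$

Then the integrand in the density function of the sum is

$$f_X(a-y)\,f_Y(y) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left\{-\frac{(a-y)^{2}}{2\sigma^{2}}\right\}\frac{1}{\sqrt{2\pi}}\exp\left\{-\frac{y^{2}}{2}\right\} = \frac{1}{2\pi\sigma}\exp\left\{-\frac{a^{2}}{2\sigma^{2}}\right\}\exp\left\{-c\left(y^{2} - \frac{2ya}{1+\sigma^{2}}\right)\right\}$$

by the definition of the density function of the normal distribution. Completing the square in y and integrating,

$$f_{X+Y}(a) = \frac{1}{2\pi\sigma}\exp\left\{-\frac{a^{2}}{2\sigma^{2}}\right\}\exp\left\{\frac{a^{2}}{2\sigma^{2}(1+\sigma^{2})}\right\}\int_{-\infty}^{\infty}\exp\left\{-c\left(y - \frac{a}{1+\sigma^{2}}\right)^{2}\right\}dy$$

thus the density function is

$$f_{X+Y}(a) = C\exp\left\{-\frac{a^{2}}{2(1+\sigma^{2})}\right\}$$

where C does not depend on a. This is the density function of a normal distribution with mean 0 and variance (1+σ²). Following the same argument for independent normal random variables $X_1$ and $X_2$ with means $\mu_1$, $\mu_2$ and variances $\sigma_1^2$, $\sigma_2^2$, we can write

$$X_1 + X_2 = \sigma_2\left(\frac{X_1 - \mu_1}{\sigma_2} + \frac{X_2 - \mu_2}{\sigma_2}\right) + \mu_1 + \mu_2$$

Inside the parentheses, the first term is normal with mean 0 and variance $\sigma_1^2/\sigma_2^2$ and the second is normal with mean 0 and variance 1, so their sum is normal with mean 0 and variance $1 + \sigma_1^2/\sigma_2^2$. Expanding, $X_1 + X_2$ is normally distributed with mean $\mu_1 + \mu_2$, the sum of the respective means, and variance $\sigma_2^2(1 + \sigma_1^2/\sigma_2^2) = \sigma_1^2 + \sigma_2^2$, the sum of the respective variances.

Thus, by induction, the sum of n independent normal random variables is a normally distributed random variable with mean $\sum \mu_i$ and variance $\sum \sigma_i^2$.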
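A simulation can illustrate the result for two independent normal random variables. The sketch below draws samples of the sum and compares the sample mean, the sample variance and one value of the empirical distribution function with those of a normal distribution whose mean is μ1 + μ2 and whose variance is σ1² + σ2². The parameter values, seed and sample size are illustrative assumptions, and agreement of a few summary statistics is only a sanity check, not a proof of normality.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean, pvariance

rng = random.Random(1)
mu1, sigma1 = 1.0, 2.0    # illustrative parameters of the first variable
mu2, sigma2 = -3.0, 1.5   # illustrative parameters of the second variable

# Samples of the sum of two independent normal random variables.
sums = [rng.gauss(mu1, sigma1) + rng.gauss(mu2, sigma2) for _ in range(100_000)]

# Predicted distribution of the sum: means add and variances add.
predicted = NormalDist(mu1 + mu2, sqrt(sigma1**2 + sigma2**2))

sample_mean = fmean(sums)
sample_variance = pvariance(sums)
empirical_cdf_at_zero = sum(value <= 0.0 for value in sums) / len(sums)

print("mean:", sample_mean, "predicted:", predicted.mean)
print("variance:", sample_variance, "predicted:", predicted.variance)
print("P{sum <= 0}:", empirical_cdf_at_zero, "predicted:", predicted.cdf(0.0))
```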

Sums of independent Poisson random variables

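If we have two independent Poisson random variables X and Y with parameters λ1 and λ2, then their sum X+Y is also a Poisson random variable.

Since X and Y are Poisson distributed, and the event {X + Y = n} can be written as the union of the disjoint events {X = k, Y = n − k} for k = 0, 1, ..., n,

$$P\{X + Y = n\} = \sum_{k=0}^{n} P\{X = k,\ Y = n - k\}$$

Using the product rule for the probabilities of independent random variables,

$$P\{X + Y = n\} = \sum_{k=0}^{n} P\{X = k\}\,P\{Y = n - k\} = \sum_{k=0}^{n} e^{-\lambda_1}\frac{\lambda_1^{k}}{k!}\,e^{-\lambda_2}\frac{\lambda_2^{n-k}}{(n-k)!} = \frac{e^{-(\lambda_1+\lambda_2)}}{n!}\sum_{k=0}^{n}\frac{n!}{k!\,(n-k)!}\lambda_1^{k}\lambda_2^{n-k} = \frac{e^{-(\lambda_1+\lambda_2)}}{n!}(\lambda_1+\lambda_2)^{n}$$

so the sum X+Y is also Poisson distributed, with mean λ1 + λ2.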
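The convolution sum for two independent Poisson random variables can be evaluated term by term and compared with the Poisson probability with parameter λ1 + λ2. The sketch below uses illustrative rates 2.0 and 3.5; the function names are assumptions for the example.

```python
from math import exp, factorial, isclose


def poisson_pmf(k, rate):
    """P{N = k} for a Poisson random variable with the given rate."""
    return exp(-rate) * rate**k / factorial(k)


def sum_pmf(n, rate1, rate2):
    """P{X + Y = n} as the sum over k of P{X = k} * P{Y = n - k}."""
    return sum(poisson_pmf(k, rate1) * poisson_pmf(n - k, rate2) for k in range(n + 1))


rate1, rate2 = 2.0, 3.5  # illustrative parameters

agrees = all(
    isclose(sum_pmf(n, rate1, rate2), poisson_pmf(n, rate1 + rate2), rel_tol=1e-9)
    for n in range(20)
)
print("convolution matches Poisson(rate1 + rate2):", agrees)
```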

Sums of independent binomial random variables

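If we have two independent binomial random variables X and Y with parameters (n, p) and (m, p), then their sum X+Y is also a binomial random variable, with parameters (n+m, p).

Let us write the probability of the sum using the definition of the binomial distribution, with q = 1 − p and with the convention that a binomial coefficient is zero when its lower index is negative or exceeds its upper index:

$$P\{X + Y = k\} = \sum_{i=0}^{k} P\{X = i\}\,P\{Y = k - i\} = \sum_{i=0}^{k}\binom{n}{i}p^{i}q^{n-i}\binom{m}{k-i}p^{k-i}q^{m-k+i}$$

which gives, by the identity $\sum_{i=0}^{k}\binom{n}{i}\binom{m}{k-i} = \binom{n+m}{k}$,

$$P\{X + Y = k\} = p^{k}q^{n+m-k}\sum_{i=0}^{k}\binom{n}{i}\binom{m}{k-i} = \binom{n+m}{k}p^{k}q^{n+m-k}$$

so the sum X+Y is also binomially distributed, with parameters (n+m, p).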
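The binomial result can be verified exactly for particular parameters by carrying out the convolution sum with rational arithmetic. The sketch below uses the illustrative values n = 4, m = 6 and p = 1/3; the function names are assumptions for the example.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(k, n, p):
    """P{X = k} for a binomial (n, p) random variable; zero outside 0..n."""
    if k < 0 or k > n:
        return Fraction(0)
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def sum_pmf(k, n, m, p):
    """P{X + Y = k} as the sum over i of P{X = i} * P{Y = k - i}."""
    return sum(binomial_pmf(i, n, p) * binomial_pmf(k - i, m, p) for i in range(k + 1))


n, m, p = 4, 6, Fraction(1, 3)  # illustrative parameters

matches = all(sum_pmf(k, n, m, p) == binomial_pmf(k, n + m, p) for k in range(n + m + 1))
print(matches)  # True
```

Note that both variables must share the same success probability p; with different probabilities the sum is in general not binomial.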

Conclusion:

This article discussed the concept of jointly distributed random variables, which describes the distribution of more than one variable at a time. It also covered the basic concept of independent random variables in terms of the joint distribution, and the sums of independent random variables for several distributions together with their parameters. For further reading, go through the books mentioned below.

A First Course in Probability by Sheldon Ross

Schaum’s Outlines of Probability and Statistics

An Introduction to Probability and Statistics by Rohatgi and Saleh

I am DR. Mohammed Mazhar Ul Haque. I have completed my Ph.D. in Mathematics and working as an Assistant professor in Mathematics. Having 12 years of experience in teaching. Having vast knowledge in Pure Mathematics, precisely on Algebra. Having the immense ability of problem design and solving. Capable of Motivating candidates to enhance their performance.

I love to contribute to Lambdageeks to make Mathematics Simple, Interesting & Self Explanatory for beginners as well as experts.