In probability theory, Chebyshev’s inequality and the central limit theorem deal with situations where we want the probability distribution of the sum of a large number of random variables, which is approximately normal. Before looking at the limit theorems, we review some inequalities that provide bounds on probabilities when only the mean, or the mean and the variance, are known.

Markov’s inequality

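Markov’s inequality for a random variable $X$ that takes only nonnegative values states that, for any $a>0$,

$$P\{X \ge a\} \le \frac{E[X]}{a}.$$

To prove this for $a>0$, consider the indicator

$$I = \begin{cases} 1 & \text{if } X \ge a \\ 0 & \text{otherwise.} \end{cases}$$

Since $X \ge 0$,

$$I \le \frac{X}{a}.$$

Taking expectations of this inequality, we get

$$E[I] \le \frac{E[X]}{a}.$$

This is useful because

$$E[I] = P\{X \ge a\},$$

which gives Markov’s inequality for $a>0$ as

$$P\{X \ge a\} \le \frac{E[X]}{a}.$$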

Chebyshev’s inequality

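For a random variable $X$ with finite mean $\mu$ and variance $\sigma^2$, Chebyshev’s inequality for $k>0$ is

$$P\{|X-\mu| \ge k\} \le \frac{\sigma^2}{k^2},$$

where $\sigma^2$ and $\mu$ are the variance and mean of the random variable. To prove this, we apply Markov’s inequality to the nonnegative random variable

$$(X-\mu)^2$$

with the constant $a = k^2$, hence

$$P\{(X-\mu)^2 \ge k^2\} \le \frac{E[(X-\mu)^2]}{k^2}.$$

This is equivalent to

$$P\{|X-\mu| \ge k\} \le \frac{E[(X-\mu)^2]}{k^2} = \frac{\sigma^2}{k^2},$$

as clearly

$$(X-\mu)^2 \ge k^2 \iff |X-\mu| \ge k.$$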

Examples of Markov’s and Chebyshev’s inequalities:

1. The weekly production of a specific item is a random variable with mean 50. Find a bound on the probability that production exceeds 75 in a week. If the variance of the weekly production is 25, what can be said about the probability that production is between 40 and 60?

Solution: Let the random variable $X$ be the production of the item in a week. To bound the probability of production exceeding 75 we use Markov’s inequality:

$$P\{X > 75\} \le \frac{E[X]}{75} = \frac{50}{75} = \frac{2}{3}.$$

For production between 40 and 60 with variance 25 we use Chebyshev’s inequality:

$$P\{|X-50| \ge 10\} \le \frac{\sigma^2}{10^2} = \frac{25}{100} = \frac{1}{4},$$

so

$$P\{|X-50| < 10\} \ge 1 - \frac{1}{4} = \frac{3}{4}.$$

This shows that the probability that the week’s production is between 40 and 60 is at least 3/4.
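The two bounds in this example can be checked with exact rational arithmetic. The sketch below is only an illustration; the helper names `markov_bound` and `chebyshev_bound` are made up for this article and are not part of any library.

```python
from fractions import Fraction

def markov_bound(mean, a):
    """Upper bound on P{X >= a} for a nonnegative X with the given mean."""
    return Fraction(mean) / Fraction(a)

def chebyshev_bound(variance, k):
    """Upper bound on P{|X - mu| >= k} for X with the given variance."""
    return Fraction(variance) / Fraction(k) ** 2

# Weekly production from the example: mean 50, variance 25.
p_exceeds_75 = markov_bound(50, 75)         # bound on P{X > 75}
p_outside_40_60 = chebyshev_bound(25, 10)   # bound on P{|X - 50| >= 10}
p_inside_40_60 = 1 - p_outside_40_60        # lower bound on P{40 < X < 60}

print(p_exceeds_75, p_outside_40_60, p_inside_40_60)  # 2/3 1/4 3/4
```

Note that both results are bounds, not exact probabilities: the true values depend on the full distribution of the production, which the example does not specify.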

2. Show that Chebyshev’s inequality, which provides an upper bound on a probability, is not necessarily close to the actual value of that probability.

Solution:

Suppose the random variable $X$ is uniformly distributed over the interval (0,10), so that its mean is 5 and its variance is 25/3. Then by Chebyshev’s inequality we can write

$$P\{|X-5| > 4\} \le \frac{25}{3 \cdot 16} \approx 0.52,$$

but the actual probability is

$$P\{|X-5| > 4\} = 0.20,$$

so the bound is far from the actual probability. Likewise, if $X$ is normally distributed with mean $\mu$ and variance $\sigma^2$, then Chebyshev’s inequality gives

$$P\{|X-\mu| > 2\sigma\} \le \frac{1}{4},$$

but the actual probability is

$$P\{|X-\mu| > 2\sigma\} = P\left\{\left|\frac{X-\mu}{\sigma}\right| > 2\right\} = 2[1-\Phi(2)] \approx 0.0456.$$
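A quick numerical comparison makes the gap visible. This sketch assumes the uniform variable lives on (0, 10), the interval consistent with the stated mean 5 and variance 25/3, and uses a deviation of 4 for the uniform case and two standard deviations for the normal case; those deviation sizes are choices made for illustration.

```python
from statistics import NormalDist

# Uniform on (0, 10): mean 5, variance 25/3, deviation of more than 4.
chebyshev_uniform = (25 / 3) / 4**2     # Chebyshev bound on P{|X - 5| > 4}
actual_uniform = 1 / 10 + 1 / 10        # X < 1 or X > 9, each of length 1 out of 10

# Normal: deviation of more than two standard deviations from the mean.
chebyshev_normal = 1 / 2**2             # sigma^2 / (2 sigma)^2
actual_normal = 2 * (1 - NormalDist().cdf(2.0))

print(f"uniform: bound {chebyshev_uniform:.4f}, actual {actual_uniform:.4f}")
print(f"normal:  bound {chebyshev_normal:.4f}, actual {actual_normal:.4f}")
```

In both cases the bound holds but overshoots the true probability by a wide margin, which is the price of using only the mean and variance.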

Weak Law of Large Numbers

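The weak law for a sequence of random variables follows from Chebyshev’s inequality, which is also a useful tool in other proofs. For example, we first prove that

$$P\{X = E[X]\} = 1$$

if the variance of $X$ is zero; that is, the only random variables with variance 0 are those that are constant with probability 1. By Chebyshev’s inequality, for any $n \ge 1$,

$$P\left\{|X-\mu| > \frac{1}{n}\right\} = 0.$$

Letting $n \to \infty$,

$$0 = \lim_{n\to\infty} P\left\{|X-\mu| > \frac{1}{n}\right\} = P\left\{\lim_{n\to\infty}\left\{|X-\mu| > \frac{1}{n}\right\}\right\} = P\{X \ne \mu\},$$

where the middle equality holds by the continuity of probability,

which proves the result.

Weak law of large numbers: for a sequence $X_1, X_2, \ldots$ of independent and identically distributed random variables, each with finite mean $E[X_i]=\mu$, and for any $\varepsilon > 0$,

$$P\left\{\left|\frac{X_1+\cdots+X_n}{n}-\mu\right| \ge \varepsilon\right\} \to 0 \quad \text{as } n\to\infty.$$

To prove this we assume that the variance $\sigma^2$ of each random variable in the sequence is also finite, so the expectation and variance of the average are

$$E\left[\frac{X_1+\cdots+X_n}{n}\right] = \mu, \qquad \mathrm{Var}\left(\frac{X_1+\cdots+X_n}{n}\right) = \frac{\sigma^2}{n}.$$

Chebyshev’s inequality then gives the upper bound

$$P\left\{\left|\frac{X_1+\cdots+X_n}{n}-\mu\right| \ge \varepsilon\right\} \le \frac{\sigma^2}{n\varepsilon^2},$$

which tends to 0 as $n$ tends to infinity.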
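The weak law can be illustrated with a small simulation. The sketch below uses fair-coin flips (mean 1/2, variance 1/4) as an assumed example distribution and compares the observed frequency of large deviations of the sample mean with the Chebyshev bound $\sigma^2/(n\varepsilon^2)$; the function name, seed, and sample sizes are arbitrary choices.

```python
import random

def deviation_frequency(n, eps, trials, rng):
    """Fraction of trials in which the mean of n fair-coin flips misses 1/2 by >= eps."""
    misses = 0
    for _ in range(trials):
        mean = sum(rng.random() < 0.5 for _ in range(n)) / n
        if abs(mean - 0.5) >= eps:
            misses += 1
    return misses / trials

rng = random.Random(0)      # fixed seed so the run is repeatable
eps, trials = 0.05, 300
results = {}
for n in (100, 400, 1600):
    # Chebyshev bound sigma^2 / (n eps^2) with sigma^2 = 1/4, capped at 1.
    bound = min(1.0, 0.25 / (n * eps**2))
    results[n] = (deviation_frequency(n, eps, trials, rng), bound)
    print(n, results[n])
```

Both the observed frequency and the bound shrink as $n$ grows, and the observed frequency stays below the bound.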

Central Limit theorem

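The central limit theorem is one of the most important results in probability theory: it states that the sum of a large number of independent random variables is approximately normally distributed. Besides giving a method for finding approximate probabilities for sums of independent random variables, the central limit theorem also helps explain why the empirical frequencies of so many natural populations exhibit bell-shaped (normal) curves. Before giving the detailed statement of this theorem, we state the following result.

“If the sequence of random variables $Z_1, Z_2, \ldots$ has distribution functions $F_{Z_n}$ and moment generating functions $M_{Z_n}$, $n \ge 1$, and $Z$ is a random variable with distribution function $F_Z$ and moment generating function $M_Z$, and if $M_{Z_n}(t) \to M_Z(t)$ for all $t$, then $F_{Z_n}(t) \to F_Z(t)$ for all $t$ at which $F_Z(t)$ is continuous.”

Central Limit theorem: For a sequence of independent and identically distributed random variables $X_1, X_2, \ldots$, each having mean $\mu$ and variance $\sigma^2$, the distribution of the standardized sum

$$\frac{X_1+\cdots+X_n-n\mu}{\sigma\sqrt{n}}$$

tends to the standard normal as $n$ tends to infinity; that is, for every real $a$,

$$P\left\{\frac{X_1+\cdots+X_n-n\mu}{\sigma\sqrt{n}} \le a\right\} \to \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{a} e^{-x^2/2}\,dx \quad \text{as } n\to\infty.$$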

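Proof: First take the mean to be zero and the variance to be one, i.e. $\mu=0$ and $\sigma^2=1$, and assume that the moment generating function $M(t)$ of $X_i$ exists and is finite. The moment generating function of the random variable $X_i/\sqrt{n}$ is then

$$E\left[\exp\left(\frac{tX_i}{\sqrt{n}}\right)\right] = M\left(\frac{t}{\sqrt{n}}\right),$$

hence the moment generating function of the sum $\sum_{i=1}^{n} X_i/\sqrt{n}$ is

$$\left[M\left(\frac{t}{\sqrt{n}}\right)\right]^n.$$

Now let $L(t)=\log M(t)$,

so

$$L(0)=0, \qquad L'(0)=\frac{M'(0)}{M(0)}=\mu=0, \qquad L''(0)=\frac{M(0)M''(0)-[M'(0)]^2}{[M(0)]^2}=E[X^2]=1.$$

To prove the theorem we must show that the moment generating function of the sum converges to that of the standard normal,

$$\left[M\left(\frac{t}{\sqrt{n}}\right)\right]^n \to e^{t^2/2} \quad \text{as } n\to\infty,$$

which we do by showing its equivalent form

$$nL\left(\frac{t}{\sqrt{n}}\right) \to \frac{t^2}{2}.$$

This holds since, by two applications of L’Hôpital’s rule,

$$\lim_{n\to\infty}\frac{L(t/\sqrt{n})}{n^{-1}} = \lim_{n\to\infty}\frac{-L'(t/\sqrt{n})\,n^{-3/2}\,t}{-2n^{-2}} = \lim_{n\to\infty}\frac{L'(t/\sqrt{n})\,t}{2n^{-1/2}} = \lim_{n\to\infty}\frac{-L''(t/\sqrt{n})\,n^{-3/2}\,t^2}{-2n^{-3/2}} = \lim_{n\to\infty}L''\left(\frac{t}{\sqrt{n}}\right)\frac{t^2}{2} = \frac{t^2}{2}.$$

This proves the result for mean zero and variance 1. The general case follows by considering the standardized random variables

$$X_i^* = \frac{X_i-\mu}{\sigma},$$

which have mean 0 and variance 1, so for each $a$ we have

$$P\left\{\frac{X_1+\cdots+X_n-n\mu}{\sigma\sqrt{n}} \le a\right\} \to \Phi(a).$$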

Example of the Central Limit theorem

1. An astronomer wants to measure the distance, in light-years, from the lab to a star. Because of changing atmospheric conditions, each measurement is not exact but carries some error, so the astronomer plans to take a series of measurements and use their average as the estimated distance. If the measurements are independent and identically distributed random variables with mean $d$ (the actual distance) and variance 4 (in light-years squared), how many measurements are needed for the estimate to be within 0.5 light-year of the actual value?

Solution: Let the measurements be the independent random variables $X_1, X_2, \ldots, X_n$. By the central limit theorem,

$$Z_n = \frac{\sum_{i=1}^{n} X_i - nd}{2\sqrt{n}}$$

is approximately standard normal, so

$$P\left\{-0.5 \le \frac{\sum_{i=1}^{n} X_i}{n} - d \le 0.5\right\} = P\left\{-0.5\frac{\sqrt{n}}{2} \le Z_n \le 0.5\frac{\sqrt{n}}{2}\right\} \approx \Phi\left(\frac{\sqrt{n}}{4}\right) - \Phi\left(-\frac{\sqrt{n}}{4}\right) = 2\Phi\left(\frac{\sqrt{n}}{4}\right) - 1.$$

So, to be 95 percent certain of this accuracy, the astronomer should make $n^*$ measurements, where

$$2\Phi\left(\frac{\sqrt{n^*}}{4}\right) - 1 = 0.95, \quad \text{i.e.} \quad \Phi\left(\frac{\sqrt{n^*}}{4}\right) = 0.975.$$

From the normal distribution table,

$$\frac{\sqrt{n^*}}{4} = 1.96, \quad \text{so} \quad n^* = (7.84)^2 \approx 61.47,$$

which says that 62 measurements are needed. The same question can be approached with Chebyshev’s inequality, using

$$E\left[\sum_{i=1}^{n}\frac{X_i}{n}\right] = d, \qquad \mathrm{Var}\left(\sum_{i=1}^{n}\frac{X_i}{n}\right) = \frac{4}{n},$$

so the inequality gives

$$P\left\{\left|\sum_{i=1}^{n}\frac{X_i}{n} - d\right| > 0.5\right\} \le \frac{4}{n(0.5)^2} = \frac{16}{n}.$$

Hence $n = 16/0.05 = 320$ measurements guarantee, with 95 percent certainty, that the estimate is within 0.5 light-year of the actual distance of the star from the lab.
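The two sample sizes can be recomputed in a few lines. This is a sketch under the example's assumptions (variance 4, tolerance 0.5 light-year, 95 percent certainty); the variable names are illustrative, and `NormalDist.inv_cdf` from the standard library stands in for the normal table.

```python
import math
from fractions import Fraction
from statistics import NormalDist

variance = 4                   # variance of one measurement (from the example)
tolerance = Fraction(1, 2)     # allowed error in light-years
confidence = 0.95

# CLT: need 2*Phi(sqrt(n) * tolerance / sigma) - 1 >= confidence.
z = NormalDist().inv_cdf((1 + confidence) / 2)   # about 1.96
n_clt = math.ceil((z * math.sqrt(variance) / float(tolerance)) ** 2)

# Chebyshev: need variance / (n * tolerance^2) <= 1 - confidence = 1/20.
n_chebyshev = math.ceil(Fraction(variance) / (tolerance**2 * Fraction(1, 20)))

print(n_clt, n_chebyshev)
```

The Chebyshev count is much larger because that inequality uses only the mean and variance and not the approximate normality of the average.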

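2. The number of students admitted to an engineering course is Poisson distributed with mean 100. It was decided that if 120 or more students are admitted the course will be taught in two sections, otherwise in one section only. What is the probability that there will be two sections for the course?

Solution: From the Poisson distribution, the exact solution is

$$e^{-100}\sum_{i=120}^{\infty}\frac{100^i}{i!},$$

which does not readily yield a numerical value. Let the random variable $X$ be the number of students admitted. A Poisson random variable with mean 100 is the sum of 100 independent Poisson random variables with mean 1, so by the central limit theorem, with a continuity correction,

$$P\{X \ge 120\} = P\{X \ge 119.5\},$$

which can be written as

$$P\left\{\frac{X-100}{\sqrt{100}} \ge \frac{119.5-100}{\sqrt{100}}\right\} \approx 1 - \Phi(1.95) \approx 0.0256,$$

which is the numerical value.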
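With a computer the exact Poisson tail is easy to evaluate, so the normal approximation can be compared against it. The following is a minimal sketch; it builds the Poisson probabilities term by term and applies a continuity correction (119.5 instead of 120) in the normal approximation, and all variable names are illustrative.

```python
import math
from statistics import NormalDist

lam, threshold = 100, 120

# Exact tail: 1 - sum_{i < 120} e^{-lam} lam^i / i!, built term by term.
term = math.exp(-lam)          # P{X = 0}
cdf = 0.0
for i in range(threshold):
    cdf += term
    term *= lam / (i + 1)      # P{X = i + 1} obtained from P{X = i}
exact = 1 - cdf

# Central limit approximation with continuity correction: P{X >= 119.5}.
clt = 1 - NormalDist(mu=lam, sigma=math.sqrt(lam)).cdf(threshold - 0.5)

print(f"exact {exact:.4f}, normal approximation {clt:.4f}")
```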

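3. Calculate the probability that the sum of ten rolled dice is between 30 and 40, including 30 and 40.

Solution: Let $X_i$ be the value of the $i$th die, $i = 1, \ldots, 10$. The mean and variance are

$$E[X_i] = \frac{7}{2}, \qquad \mathrm{Var}(X_i) = E[X_i^2] - (E[X_i])^2 = \frac{35}{12}.$$

Thus, with $X = \sum_{i=1}^{10} X_i$, the central limit theorem with a continuity correction gives

$$P\{29.5 \le X \le 40.5\} = P\left\{\frac{29.5-35}{\sqrt{350/12}} \le \frac{X-35}{\sqrt{350/12}} \le \frac{40.5-35}{\sqrt{350/12}}\right\} \approx 2\Phi(1.0184) - 1 \approx 0.692,$$

which is the required probability.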
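For ten dice the exact distribution of the sum is small enough to compute directly, which gives a check on the normal approximation. This sketch builds the exact distribution by repeated convolution and compares it with the central limit estimate using a continuity correction; the variable names are illustrative.

```python
import math
from statistics import NormalDist

# Exact distribution of the sum of ten fair dice by repeated convolution.
dist = {0: 1.0}
for _ in range(10):
    nxt = {}
    for total, p in dist.items():
        for face in range(1, 7):
            nxt[total + face] = nxt.get(total + face, 0.0) + p / 6
    dist = nxt
exact = sum(p for total, p in dist.items() if 30 <= total <= 40)

# Normal approximation: mean 10 * 7/2, variance 10 * 35/12, limits 29.5 and 40.5.
mean, var = 10 * 3.5, 10 * 35 / 12
approx = NormalDist(mean, math.sqrt(var))
clt = approx.cdf(40.5) - approx.cdf(29.5)

print(f"exact {exact:.4f}, normal approximation {clt:.4f}")
```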

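4. For independent random variables $X_i$, $i = 1, \ldots, 10$, each uniformly distributed on the interval (0,1), what is the approximate value of the probability

$$P\left\{\sum_{i=1}^{10} X_i > 6\right\}?$$

Solution: From the uniform distribution we know that the mean and variance are

$$E[X_i] = \frac{1}{2}, \qquad \mathrm{Var}(X_i) = \frac{1}{12}.$$

Now using the central limit theorem we get

$$P\left\{\sum_{i=1}^{10} X_i > 6\right\} = P\left\{\frac{\sum_{i=1}^{10} X_i - 5}{\sqrt{10/12}} > \frac{6-5}{\sqrt{10/12}}\right\} \approx 1 - \Phi(\sqrt{1.2}) \approx 0.1367,$$

thus the sum of the random variables exceeds 6 only about 14 percent of the time.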

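5. An evaluator has 50 exams to grade. The grading times are independent, with mean 20 min and standard deviation 4 min. Find the probability that the evaluator grades at least 25 of the exams in the first 450 min.

Solution: Let $X_i$ be the time required to grade the $i$th exam, so the time needed to grade the first 25 exams is

$$X = \sum_{i=1}^{25} X_i.$$

At least 25 exams are graded within 450 min exactly when $X \le 450$, and

$$E[X] = 25(20) = 500, \qquad \mathrm{Var}(X) = 25(16) = 400.$$

Using the central limit theorem,

$$P\{X \le 450\} = P\left\{\frac{X-500}{\sqrt{400}} \le \frac{450-500}{\sqrt{400}}\right\} \approx P\{Z \le -2.5\} = 1 - \Phi(2.5) \approx 0.006,$$

which is the required probability.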
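The normal approximation in this example reduces to one evaluation of the normal distribution function. A minimal sketch, assuming the quantity of interest is the total time for the first 25 exams, with the figures taken from the problem statement:

```python
import math
from statistics import NormalDist

n_exams, mean_time, sd_time, deadline = 25, 20, 4, 450

# Total grading time for 25 exams: mean 25 * 20, standard deviation 4 * sqrt(25).
total = NormalDist(mu=n_exams * mean_time, sigma=sd_time * math.sqrt(n_exams))
p_done = total.cdf(deadline)   # approximates P{X <= 450} = Phi(-2.5)

print(f"{p_done:.4f}")
```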

Central Limit theorem for independent random variables

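For a sequence of independent random variables $X_1, X_2, \ldots$ that are not necessarily identically distributed, with means $\mu_i = E[X_i]$ and variances $\sigma_i^2 = \mathrm{Var}(X_i)$, suppose that

- the $X_i$ are uniformly bounded, that is, for some $M$, $P\{|X_i| < M\} = 1$ for all $i$

- the sum of the variances is infinite, $\sum_{i=1}^{\infty}\sigma_i^2 = \infty$; then

$$P\left\{\frac{\sum_{i=1}^{n}(X_i-\mu_i)}{\sqrt{\sum_{i=1}^{n}\sigma_i^2}} \le a\right\} \to \Phi(a) \quad \text{as } n\to\infty.$$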

Strong Law of Large Numbers

The strong law of large numbers is a crucial concept in probability theory. It says that the average of a sequence of independent, identically distributed random variables converges, with probability one, to the mean of that distribution.

Statement: For a sequence of independent and identically distributed random variables $X_1, X_2, \ldots$, each having finite mean $\mu$, with probability one

$$\frac{X_1+X_2+\cdots+X_n}{n} \to \mu \quad \text{as } n\to\infty.$$

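Proof: We give the proof under the additional assumption that the fourth moment is finite, $E[X_i^4] = K < \infty$. First take the mean of each random variable to be zero, and consider the partial sums

$$S_n = \sum_{i=1}^{n} X_i.$$

Now consider the fourth power

$$E[S_n^4] = E[(X_1+\cdots+X_n)(X_1+\cdots+X_n)(X_1+\cdots+X_n)(X_1+\cdots+X_n)].$$

Expanding the right-hand side gives terms of the form

$$X_i^4, \quad X_i^3X_j, \quad X_i^2X_j^2, \quad X_i^2X_jX_k, \quad X_iX_jX_kX_l.$$

Since the variables are independent with mean zero, the expectations of the mixed terms are

$$E[X_i^3X_j] = E[X_i^3]E[X_j] = 0, \quad E[X_i^2X_jX_k] = E[X_i^2]E[X_j]E[X_k] = 0, \quad E[X_iX_jX_kX_l] = 0.$$

For each pair $i, j$ there are $\binom{4}{2} = 6$ terms equal to $X_i^2X_j^2$, so the expansion becomes

$$E[S_n^4] = nE[X_i^4] + 6\binom{n}{2}E[X_i^2X_j^2] = nK + 3n(n-1)E[X_i^2]E[X_j^2].$$

Since

$$0 \le \mathrm{Var}(X_i^2) = E[X_i^4] - (E[X_i^2])^2,$$

so

$$(E[X_i^2])^2 \le E[X_i^4] = K,$$

we get

$$E[S_n^4] \le nK + 3n(n-1)K.$$

This gives the inequality

$$E\left[\frac{S_n^4}{n^4}\right] \le \frac{K}{n^3} + \frac{3K}{n^2},$$

hence

$$E\left[\sum_{n=1}^{\infty}\frac{S_n^4}{n^4}\right] = \sum_{n=1}^{\infty}E\left[\frac{S_n^4}{n^4}\right] < \infty.$$

Because its expectation is finite, the series $\sum_{n} S_n^4/n^4$ converges with probability one, so its terms must tend to zero:

$$\lim_{n\to\infty}\frac{S_n^4}{n^4} = 0 \quad \text{with probability 1.}$$

Since

$$\frac{S_n^4}{n^4} = \left(\frac{S_n}{n}\right)^4,$$

it follows that $S_n/n \to 0$ with probability one. If the mean $\mu$ of each random variable is not equal to zero, we apply this argument to the deviations $X_i - \mu$, and with probability one we can write

$$\lim_{n\to\infty}\sum_{i=1}^{n}\frac{X_i-\mu}{n} = 0,$$

or

$$\lim_{n\to\infty}\sum_{i=1}^{n}\frac{X_i}{n} = \mu,$$

which is the required result.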
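The behaviour described by the strong law can be watched along a single simulated sequence. The sketch below uses rolls of a fair die (mean 3.5) as an assumed example and records the running average at a few checkpoints; the seed and checkpoints are arbitrary.

```python
import random

rng = random.Random(1)          # fixed seed so the run is repeatable
total = 0
checkpoints = {}
for n in range(1, 100_001):
    total += rng.randint(1, 6)  # one roll of a fair die, mean 3.5
    if n in (10, 1_000, 100_000):
        checkpoints[n] = total / n   # running average after n rolls

for n, avg in checkpoints.items():
    print(n, round(avg, 4))
```

Unlike the weak law, which bounds the probability of a large deviation at one fixed $n$, the strong law concerns what happens along the whole path of running averages, which is what this single run traces.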

One Sided Chebyshev Inequality

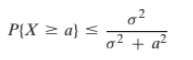

The one sided Chebysheve inequality for the random variable X with mean zero and finite variance if a>0 is

to prove this consider for b>0 let the random variable X as

which gives

so using the Markov’s inequality

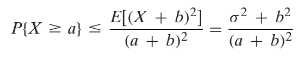

which gives the required inequality. for the mean and variance we can write it as

This further can be written as

Example:

Find an upper bound on the probability that the weekly production of a company will be at least 120, if the production is a random variable with mean 100 and variance 400.

Solution:

Using the one-sided Chebyshev inequality,

$$P\{X \ge 120\} = P\{X - 100 \ge 20\} \le \frac{400}{400+(20)^2} = \frac{1}{2},$$

so the probability that the production in a week is at least 120 is at most 1/2. The bound obtained by using Markov’s inequality is

$$P\{X \ge 120\} \le \frac{E[X]}{120} = \frac{5}{6},$$

which is a weaker upper bound for the same probability.
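The two bounds for this example can be compared with exact fractions. The sketch below is illustrative only; `one_sided_chebyshev` is a name invented here for the bound $\sigma^2/(\sigma^2+a^2)$ on $P\{X-\mu \ge a\}$.

```python
from fractions import Fraction

def one_sided_chebyshev(variance, a):
    """Upper bound on P{X - mu >= a} for a > 0, given the variance of X."""
    return Fraction(variance) / (Fraction(variance) + Fraction(a) ** 2)

mean, variance, level = 100, 400, 120

one_sided = one_sided_chebyshev(variance, level - mean)  # uses mean and variance
markov = Fraction(mean, level)                           # uses the mean only

print(one_sided, markov)  # 1/2 5/6
```

The one-sided Chebyshev bound is tighter here because it also uses the variance.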

Example:

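Two hundred persons, a hundred men and a hundred women, are randomly divided into a hundred pairs. Find an upper bound on the probability that at most thirty of the pairs will consist of a man and a woman.

Solution:

Number the men from 1 to 100 and let the random variable $X_i$ be

$$X_i = \begin{cases} 1 & \text{if man } i \text{ is paired with a woman} \\ 0 & \text{otherwise,} \end{cases}$$

so the number of man-woman pairs can be expressed as

$$X = \sum_{i=1}^{100} X_i.$$

Since each man is equally likely to be paired with any of the other 199 people, of whom a hundred are women, the mean is

$$E[X_i] = P\{X_i = 1\} = \frac{100}{199}.$$

In the same way, if $i$ and $j$ are not equal then

$$E[X_iX_j] = P\{X_i=1, X_j=1\} = P\{X_i=1\}\,P\{X_j=1 \mid X_i=1\} = \frac{100}{199}\cdot\frac{99}{197},$$

as

$$P\{X_j=1 \mid X_i=1\} = \frac{99}{197}.$$

Hence we have

$$E[X] = \sum_{i=1}^{100}E[X_i] = 100\cdot\frac{100}{199} \approx 50.25,$$

$$\mathrm{Var}(X) = \sum_{i=1}^{100}\mathrm{Var}(X_i) + 2\sum_{i<j}\mathrm{Cov}(X_i,X_j) = 100\cdot\frac{100}{199}\cdot\frac{99}{199} + 2\binom{100}{2}\left[\frac{100}{199}\cdot\frac{99}{197} - \left(\frac{100}{199}\right)^2\right] \approx 25.126.$$

Using Chebyshev’s inequality,

$$P\{X \le 30\} \le P\{|X-50.25| \ge 20.25\} \le \frac{25.126}{(20.25)^2} \approx 0.061,$$

which tells us that the probability of at most 30 man-woman pairs is about 6 percent at most. We can improve the bound by using the one-sided Chebyshev inequality:

$$P\{X \le 30\} = P\{X \le 50.25 - 20.25\} \le \frac{25.126}{25.126+(20.25)^2} \approx 0.058.$$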
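The mean, variance, and both bounds for the pairing example can be recomputed numerically. This is a sketch under the setup that each man is paired uniformly at random with one of the other 199 people; the variable names are illustrative.

```python
from math import comb

p = 100 / 199                          # P{man i is paired with a woman}
p_joint = (100 / 199) * (99 / 197)     # P{men i and j are both paired with women}

mean = 100 * p
# Var(X) = sum of the variances + 2 * sum of the covariances over pairs i < j.
variance = 100 * p * (1 - p) + 2 * comb(100, 2) * (p_joint - p**2)

gap = mean - 30                        # distance from the mean down to 30
two_sided = variance / gap**2                  # Chebyshev bound on P{X <= 30}
one_sided = variance / (variance + gap**2)     # one-sided Chebyshev bound

print(f"mean {mean:.2f}, variance {variance:.3f}")
print(f"Chebyshev {two_sided:.3f}, one-sided {one_sided:.3f}")
```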

Chernoff Bound

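If the moment generating function of the random variable $X$ is known,

$$M(t) = E[e^{tX}],$$

then, by Markov’s inequality applied to the nonnegative random variable $e^{tX}$, for $t>0$

$$P\{X \ge a\} = P\{e^{tX} \ge e^{ta}\} \le E[e^{tX}]\,e^{-ta}.$$

In the same way we can write for $t<0$

$$P\{X \le a\} = P\{e^{tX} \ge e^{ta}\} \le E[e^{tX}]\,e^{-ta}.$$

Thus the Chernoff bounds can be defined as

$$P\{X \ge a\} \le e^{-ta}M(t) \quad \text{for all } t>0, \qquad P\{X \le a\} \le e^{-ta}M(t) \quad \text{for all } t<0.$$

Since these inequalities hold for all positive (respectively all negative) values of $t$, the best bound is obtained by choosing the $t$ that minimizes $e^{-ta}M(t)$.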

Chernoff bounds for the standard normal random variable

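For the standard normal random variable $Z$, whose moment generating function is

$$M(t) = e^{t^2/2},$$

the Chernoff bound is

$$P\{Z \ge a\} \le e^{-ta}e^{t^2/2} \quad \text{for all } t>0.$$

Minimizing the right-hand side, that is, minimizing the exponent $t^2/2 - ta$, which happens at $t=a$, gives for $a>0$

$$P\{Z \ge a\} \le e^{-a^2/2},$$

and for $a<0$ it is

$$P\{Z \le a\} \le e^{-a^2/2}.$$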
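To see how the Chernoff bound $e^{-a^2/2}$ compares with the true upper tail of the standard normal, a few values can be tabulated. This is an illustrative sketch; the values of $a$ are arbitrary.

```python
import math
from statistics import NormalDist

rows = []
for a in (0.5, 1.0, 2.0, 3.0):
    bound = math.exp(-a * a / 2)       # Chernoff bound on P{Z >= a}, a > 0
    tail = 1 - NormalDist().cdf(a)     # actual P{Z >= a}
    rows.append((a, tail, bound))
    print(f"a={a}: actual {tail:.5f}, Chernoff bound {bound:.5f}")
```

The bound always lies above the actual tail, and both decay at the same exponential rate in $a^2$.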

Chernoff bounds for the Poisson random variable

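For the Poisson random variable $X$ with parameter $\lambda$, whose moment generating function is

$$M(t) = e^{\lambda(e^t-1)},$$

the Chernoff bound is

$$P\{X \ge i\} \le e^{\lambda(e^t-1)}e^{-it} \quad \text{for all } t>0.$$

Minimizing the right-hand side, that is, minimizing the exponent $\lambda(e^t-1) - it$, which happens at $e^t = i/\lambda$ (a valid choice with $t>0$ when $i>\lambda$), gives

$$P\{X \ge i\} \le e^{\lambda(i/\lambda-1)}\left(\frac{\lambda}{i}\right)^i,$$

which can be written as

$$P\{X \ge i\} \le \frac{e^{-\lambda}(e\lambda)^i}{i^i}.$$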

Example on Chernoff Bounds

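In a game, a player is equally likely to win or lose 1 unit on each play, independently of past results. Find the Chernoff bound for the probability

$$P\{S_n \ge a\},$$

where $S_n$ is the player’s total winnings after $n$ plays.

Solution: Let $X_i$ denote the player’s winnings on the $i$th play; then

$$P\{X_i = 1\} = P\{X_i = -1\} = \frac{1}{2}.$$

For the sequence of $n$ plays let

$$S_n = \sum_{i=1}^{n} X_i,$$

so the moment generating function of each $X_i$ is

$$E[e^{tX}] = \frac{e^t+e^{-t}}{2}.$$

Using the expansions of the exponential terms, and the fact that $(2k)! \ge k!\,2^k$,

$$e^t + e^{-t} = 2\left(1 + \frac{t^2}{2!} + \frac{t^4}{4!} + \cdots\right) = 2\sum_{k=0}^{\infty}\frac{t^{2k}}{(2k)!} \le 2\sum_{k=0}^{\infty}\frac{(t^2/2)^k}{k!} = 2e^{t^2/2},$$

so we have

$$E[e^{tX}] \le e^{t^2/2}.$$

Now, since the moment generating function of a sum of independent random variables is the product of their moment generating functions,

$$E[e^{tS_n}] = \left(E[e^{tX}]\right)^n \le e^{nt^2/2}.$$

This gives the inequality

$$P\{S_n \ge a\} \le e^{-ta}e^{nt^2/2}, \quad t>0,$$

hence, choosing $t = a/n$, which minimizes the right-hand side,

$$P\{S_n \ge a\} \le e^{-a^2/(2n)}, \quad a>0.$$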
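For a concrete case the bound can be compared with the exact probability, since the total winnings after $n$ plays equal twice the number of wins minus $n$ and the number of wins is binomial. The sketch below assumes $\pm 1$ winnings per play and picks $n = 10$, $a = 6$ purely for illustration; `exact_tail` is a hypothetical helper name.

```python
import math

def exact_tail(n, a):
    """P{S_n >= a} where S_n is the sum of n independent fair +1/-1 steps."""
    wins_needed = math.ceil((n + a) / 2)   # S_n = 2 * wins - n
    return sum(math.comb(n, w) for w in range(wins_needed, n + 1)) / 2**n

n, a = 10, 6
exact = exact_tail(n, a)               # 56/1024
bound = math.exp(-a**2 / (2 * n))      # Chernoff bound e^{-a^2/(2n)}

print(f"exact {exact:.4f}, Chernoff bound {bound:.4f}")
```

The bound is valid but not tight: for these values it is roughly three times the exact probability.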

Conclusion:

The inequalities and limit theorems for large numbers of random variables were discussed, with worked examples of the probability bounds to illustrate the ideas. The normal and Poisson random variables and the moment generating function were used to demonstrate the concepts. For further reading, see the books below.

A first course in probability by Sheldon Ross

Schaum’s Outlines of Probability and Statistics

An introduction to probability and statistics by ROHATGI and SALEH

I am Dr. Mohammed Mazhar Ul Haque. I have completed my Ph.D. in Mathematics and work as an Assistant Professor in Mathematics, with 12 years of teaching experience. My background is in Pure Mathematics, specifically Algebra, with a focus on problem design and problem solving and on motivating candidates to improve their performance.

I love to contribute to Lambdageeks to make Mathematics Simple, Interesting & Self Explanatory for beginners as well as experts.